
Summary: only jieba, cutword, and Baidu LAC correctly split 色盲色弱 (color blindness, color weakness) into 色盲 and 色弱; their dictionaries are probably the most complete.
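To illustrate why dictionary coverage could matter here, the following is a minimal sketch of forward maximum matching over a toy dictionary. The function name `forward_max_match`, the two dictionaries, and the `max_len` parameter are assumptions made up for this example; none of the libraries below is claimed to work exactly this way.

```python
def forward_max_match(sentence, dictionary, max_len=4):
    """Greedy longest-match segmentation; unknown characters become single tokens."""
    tokens, i = [], 0
    while i < len(sentence):
        # Try the longest candidate first and shrink until a dictionary hit
        # (or fall back to a single character).
        for size in range(min(max_len, len(sentence) - i), 0, -1):
            piece = sentence[i:i + size]
            if size == 1 or piece in dictionary:
                tokens.append(piece)
                i += size
                break
    return tokens


# Hypothetical dictionaries: the second one also lists the two target words.
sparse = {"身高", "以上", "要求"}
fuller = sparse | {"色盲", "色弱"}

text = "無色盲色弱"
print(forward_max_match(text, sparse))  # ['無', '色', '盲', '色', '弱']
print(forward_max_match(text, fuller))  # ['無', '色盲', '色弱']
```

With the sparse dictionary the target words fall apart into single characters; once both words are listed, the same algorithm recovers them.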

hanlp [actively updated]

https://github.com/hankcs/HanLP/blob/doc-zh/plugins/hanlp_demo/hanlp_demo/zh/tok_stl.ipynb

import hanlp

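# hanlp.pretrained.tok.ALL  # for the language, see the last field of the name or the corresponding corpus

tok = hanlp.load(hanlp.pretrained.tok.COARSE_ELECTRA_SMALL_ZH)

# The coarse and fine models are trained on a large general-purpose corpus of 99.7 million characters covering news, social media, finance, law, and other domains; it is the largest known Chinese word segmentation corpus in the world.

# tok.dict_combine = './data/dict.txt'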

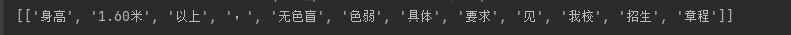

print(tok(['身高1.60米以上,無色盲色弱具體要求見我校招生章程']))

pkuseg [no longer maintained]

https://github.com/lancopku/pkuseg-python

Download the latest model

import pkuseg

# Load the model from an explicit path; passing only the model name raises
# [Errno 2] No such file or directory: 'default_v2\\unigram_word.txt'

c = pkuseg.pkuseg(model_name=r'C:\Users\ymzy\.pkuseg\default_v2')

# c = pkuseg.pkuseg(user_dict=dict_path,model_name=r'C:\Users\ymzy\.pkuseg\default_v2') #, postag = True

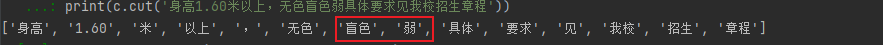

print(c.cut('身高1.60米以上,無色盲色弱具體要求見我校招生章程'))

jieba [no longer maintained]

https://github.com/fxsjy/jieba

How HMM-based Chinese word segmentation works

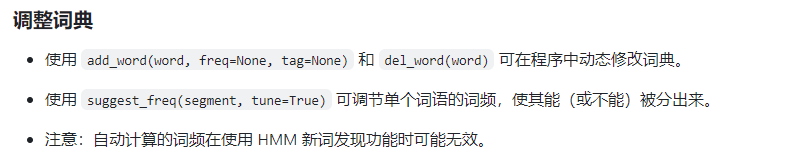
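The idea behind HMM segmentation can be sketched as tagging each character with B (begin), M (middle), E (end), or S (single-character word) and decoding the most likely tag sequence with the Viterbi algorithm. The sketch below is a toy: every probability in `START`, `TRANS`, and `EMIT` is hand-set for illustration rather than trained, and the function names are assumptions, not jieba's internals.

```python
import math

STATES = ("B", "M", "E", "S")  # Begin, Middle, End of a word; Single-character word
FLOOR = 1e-6                   # emission probability for characters not listed below

# Hand-set toy probabilities (assumptions for illustration, not trained values).
START = {"B": 0.6, "S": 0.4}   # a sentence can only start with B or S
TRANS = {                      # missing entries are impossible transitions
    "B": {"M": 0.2, "E": 0.8},
    "M": {"M": 0.3, "E": 0.7},
    "E": {"B": 0.6, "S": 0.4},
    "S": {"B": 0.6, "S": 0.4},
}
EMIT = {
    "B": {"色": 0.8},
    "M": {},
    "E": {"盲": 0.45, "弱": 0.45},
    "S": {"無": 0.8},
}


def log_or_neg_inf(p):
    return math.log(p) if p > 0 else float("-inf")


def viterbi(sentence):
    """Return the most likely BMES tag sequence for `sentence`."""
    if not sentence:
        return []
    # score[s] = best log-probability of any tag path ending in state s
    score = {s: log_or_neg_inf(START.get(s, 0.0))
                + math.log(EMIT[s].get(sentence[0], FLOOR)) for s in STATES}
    back = []  # back[i][s] = best previous state for state s at position i + 1
    for ch in sentence[1:]:
        new_score, pointers = {}, {}
        for s in STATES:
            prev = max(STATES,
                       key=lambda p: score[p] + log_or_neg_inf(TRANS[p].get(s, 0.0)))
            new_score[s] = (score[prev] + log_or_neg_inf(TRANS[prev].get(s, 0.0))
                            + math.log(EMIT[s].get(ch, FLOOR)))
            pointers[s] = prev
        score = new_score
        back.append(pointers)
    # A sentence must end on a word boundary, so the last tag is E or S.
    state = max(("E", "S"), key=lambda s: score[s])
    tags = [state]
    for pointers in reversed(back):
        state = pointers[state]
        tags.append(state)
    return tags[::-1]


def segment(sentence):
    """Cut after every character tagged E or S."""
    words, current = [], ""
    for ch, tag in zip(sentence, viterbi(sentence)):
        current += ch
        if tag in ("E", "S"):
            words.append(current)
            current = ""
    if current:
        words.append(current)
    return words


print(viterbi("無色盲色弱"))  # ['S', 'B', 'E', 'B', 'E']
print(segment("無色盲色弱"))  # ['無', '色盲', '色弱']
```

In a real segmenter the three tables are estimated from an annotated corpus; jieba's README describes using an HMM with Viterbi decoding for words that are not in its dictionary.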

import jieba

# jieba.load_userdict(file_name)

sentence = '身高1.60米以上,無色盲色弱具體要求見我校招生章程'

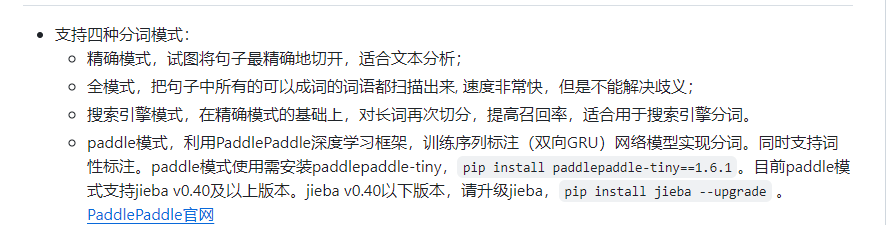

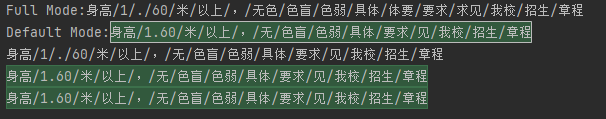

# jieba has three segmentation modes: precise mode, full mode, and search-engine mode:

seg_list = jieba.cut(sentence, cut_all=True)  # full mode

print("Full Mode:" + "/".join(seg_list))

seg_list = jieba.cut(sentence, cut_all=False)  # precise mode (the default)

print("Default Mode:" + "/".join(seg_list))

seg_list = jieba.cut(sentence, HMM=False)  # without the HMM model

print("/".join(seg_list))

seg_list = jieba.cut(sentence, HMM=True)  # with the HMM model

print("/".join(seg_list))

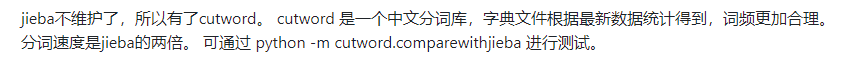
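The third mode mentioned in the comment, search-engine mode, is exposed through `jieba.cut_for_search`. The sketch below assumes jieba is installed (`pip install jieba`); the helper name `search_mode` and the import guard are additions for this example only, and no output is shown because it depends on the installed dictionary.

```python
try:
    import jieba  # third-party package
except ImportError:
    jieba = None

sentence = '身高1.60米以上,無色盲色弱具體要求見我校招生章程'


def search_mode(text):
    """Return search-engine-mode tokens, or None when jieba is unavailable."""
    if jieba is None:
        return None
    # Search-engine mode re-splits long words from precise mode into shorter
    # ones, so the tokens may overlap.
    return list(jieba.cut_for_search(text))


tokens = search_mode(sentence)
if tokens is None:
    print("jieba is not installed")
else:
    print("Search Mode:" + "/".join(tokens))
```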

cutword [latest as of 2024-01]

https://github.com/liwenju0/cutword

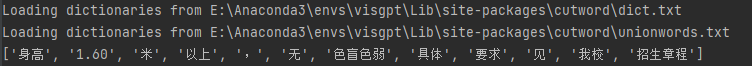

from cutword import Cutter

cutter = Cutter(want_long_word=True)

res = cutter.cutword("身高1.60米以上,無色盲色弱具體要求見我校招生章程")

print(res)

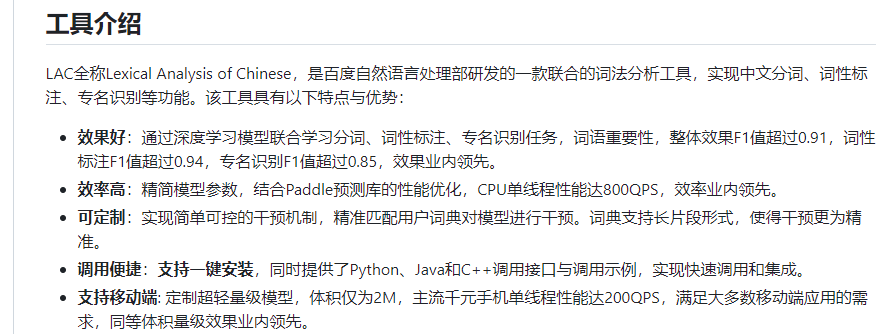

lac [no longer maintained]

https://github.com/baidu/lac

from LAC import LAC

# Load the segmentation model

seg_lac = LAC(mode='seg')

seg_lac.load_customization('./dictionary/dict.txt', sep=None)

texts = ["身高1.60米以上,無色盲色弱具體要求見我校招生章程"]

seg_result = seg_lac.run(texts)

print(seg_result)
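The comparison in this post can be made repeatable with a small harness that runs each segmenter on the test sentence and checks whether 色盲 and 色弱 come out as separate tokens. This is a minimal sketch: `splits_color_terms`, `compare`, and the two stand-in segmenters are hypothetical names invented for the example, and the stand-ins only imitate a failing and a passing tokenizer.

```python
SENTENCE = "身高1.60米以上,無色盲色弱具體要求見我校招生章程"


def splits_color_terms(tokens):
    """True when 色盲 and 色弱 both appear as tokens of their own."""
    return "色盲" in tokens and "色弱" in tokens


def compare(segmenters, sentence):
    """Map each segmenter name to True/False, or None if the call failed."""
    results = {}
    for name, cut in segmenters.items():
        try:
            results[name] = splits_color_terms(list(cut(sentence)))
        except Exception:
            results[name] = None
    return results


def per_character(sentence):
    # Stand-in for a tokenizer that does not know either word.
    return list(sentence)


def hand_split(_sentence):
    # Stand-in for a tokenizer that gets the target words right.
    return "身高/1.60/米/以上/,/無/色盲/色弱/具體/要求/見/我校/招生/章程".split("/")


results = compare({"per-character": per_character, "hand-split": hand_split}, SENTENCE)
print(results)  # {'per-character': False, 'hand-split': True}
```

To compare the real libraries, replace the stand-ins with callables that take one string and return a list of tokens, for example `jieba.lcut`, or thin wrappers around the `tok`, `c`, `cutter`, and `seg_lac` objects created earlier.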